IaaC Simplified: Amazon EC2 Deployments with GitHub Actions, Terraform, Docker & Amazon ECR

This guide shows how to deploy your own Docker apps (using AdminForth as an example) to an Amazon EC2 instance with Docker and Terraform, pushing the images to Amazon ECR.
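Pushing to ECR means tagging the image with the registry address. The Python sketch below assembles the ECR image reference and the `docker buildx build` arguments that a CI job could run. It does not execute anything. Several values are hypothetical placeholders: the account ID, the repository name `myadmin` and the `cache` tag. The `image-manifest=true,oci-mediatypes=true` cache options are assumed to be what recent BuildKit versions need to keep a registry cache in ECR, so verify them against your BuildKit version.

```python
# Hypothetical helper: assembles the ECR image URI and the `docker buildx build`
# argv a CI job could run. Account ID, region, repository and tags are placeholders.


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """Return a private ECR image reference: <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def buildx_command(account_id: str, region: str, repository: str, tag: str) -> list[str]:
    """Return argv for building and pushing an image, with the layer cache kept in ECR."""
    image = ecr_image_uri(account_id, region, repository, tag)
    cache = ecr_image_uri(account_id, region, repository, "cache")  # assumed cache tag
    return [
        "docker", "buildx", "build",
        "--tag", image,
        # Reuse layers exported by the previous build.
        "--cache-from", f"type=registry,ref={cache}",
        # Export all layers (mode=max); the manifest options are assumed to be required by ECR.
        "--cache-to",
        f"type=registry,ref={cache},mode=max,image-manifest=true,oci-mediatypes=true",
        "--push",
        ".",
    ]


if __name__ == "__main__":
    print(" ".join(buildx_command("123456789012", "us-east-1", "myadmin", "latest")))
```

Before pushing, the runner has to authenticate to the registry. This is typically done with `aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin <account>.dkr.ecr.us-east-1.amazonaws.com`.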

Needed resources:

- A GitHub Actions Free plan, which includes 2000 minutes per month. That is 1000 2-minute builds per month, which is more than enough for many projects if you are not running tests. Extra minutes cost `$0.008` per minute.

- An AWS account where we will auto-spawn an EC2 instance. We will use a `t3a.small` instance (2 vCPUs, 2GB RAM), which costs ~`$14` per month in the `us-east-1` region (one of the cheapest regions). EBS gp2 storage (20GB) for the EC2 instance adds another `$2` per month.

- Amazon ECR also charges `$0.09` per GB of data egress traffic (from AWS to the internet, e.g. to the GitHub Actions runner). This traffic is needed to load the Docker build cache.
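To make the figures above concrete, here is a rough monthly estimate in Python. It uses only the prices quoted in the list. The EBS rate of `$0.10` per GB-month is derived from `$2` for 20GB. The number of builds and the egress volume you pass in are your own assumptions. Prices change, so check the current AWS and GitHub pricing pages.

```python
# Rough monthly cost estimate from the prices quoted above (us-east-1, on-demand).

GITHUB_FREE_MINUTES = 2000     # Free plan minutes per month
GITHUB_EXTRA_PER_MIN = 0.008   # USD per extra minute
EC2_T3A_SMALL_MONTHLY = 14.0   # USD per month, approximate
EBS_GP2_PER_GB_MONTH = 0.10    # USD per GB-month ($2 for 20 GB)
EGRESS_PER_GB = 0.09           # USD per GB leaving AWS for the internet


def monthly_cost(builds: int, minutes_per_build: float, disk_gb: int, egress_gb: float) -> float:
    """Return the estimated monthly bill in USD, rounded to cents."""
    build_minutes = builds * minutes_per_build
    # Only minutes beyond the free allowance are billed.
    extra_minutes = max(0.0, build_minutes - GITHUB_FREE_MINUTES)
    github = extra_minutes * GITHUB_EXTRA_PER_MIN
    storage = disk_gb * EBS_GP2_PER_GB_MONTH
    egress = egress_gb * EGRESS_PER_GB
    return round(github + EC2_T3A_SMALL_MONTHLY + storage + egress, 2)


if __name__ == "__main__":
    # Hypothetical month: 300 two-minute builds, 20 GB disk, 10 GB of cache egress.
    print(monthly_cost(300, 2, 20, 10))
```

With 1500 two-minute builds, the 1000 minutes over the free allowance would add `$8` to the bill.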

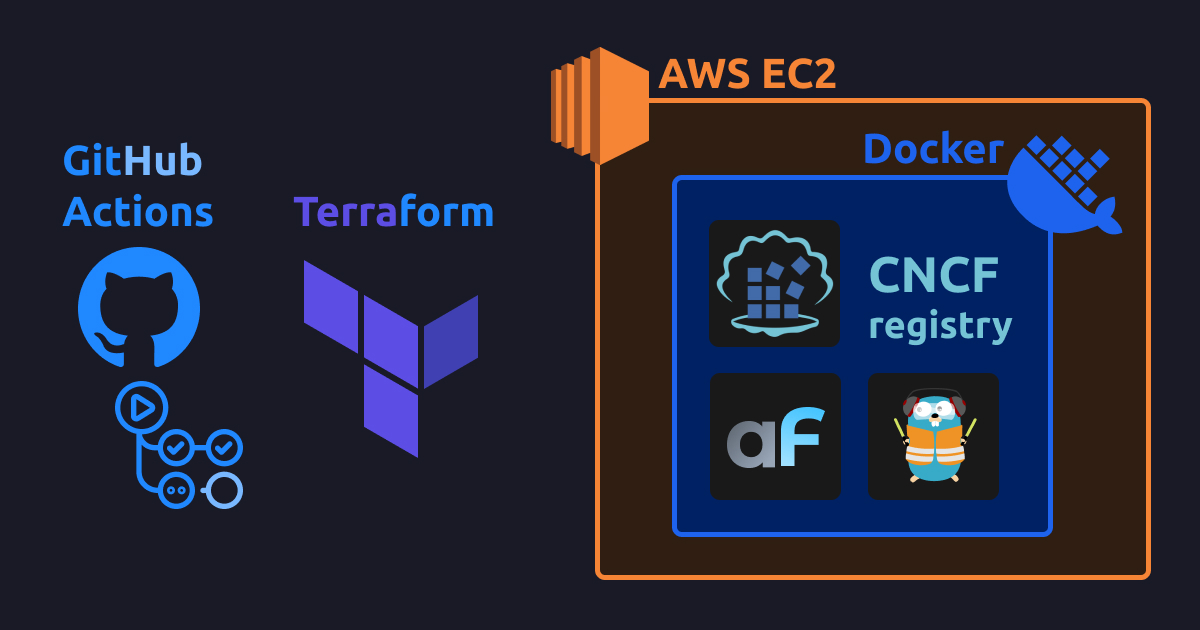

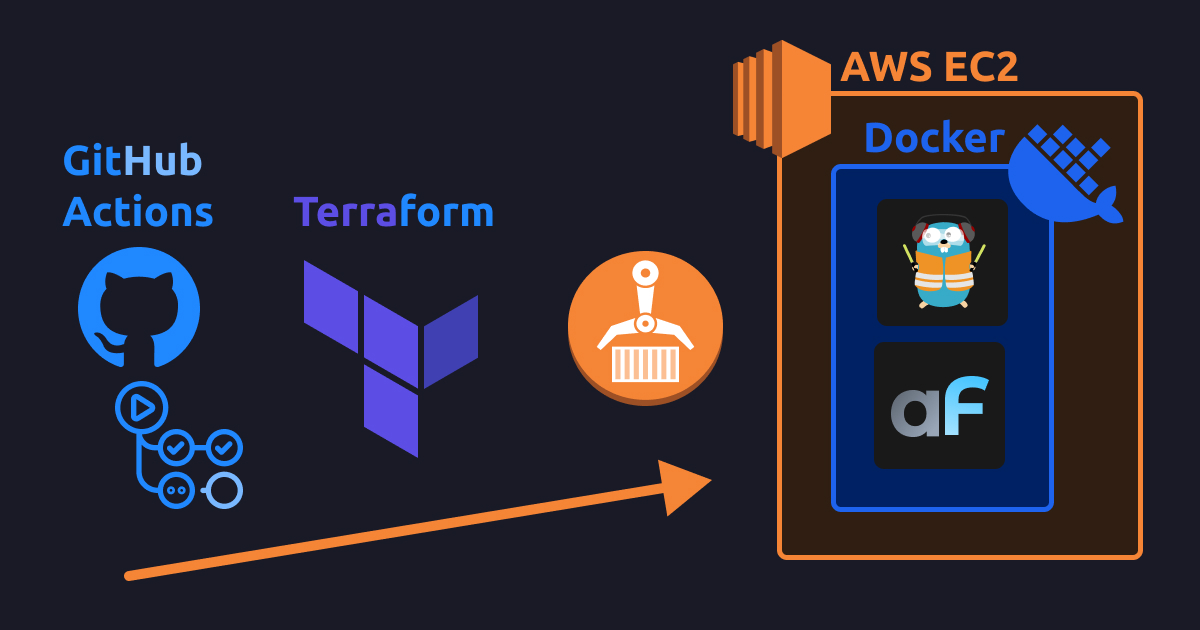

The setup shape:

- The infrastructure is built using an IaaC approach with HashiCorp Terraform, so almost no manual actions are needed from you. Every resource, including the EC2 server instance, is described in code that is committed to the repo.

- The Docker build runs on a GitHub Actions runner, so the EC2 server is not overloaded with builds.

- Changes to the infrastructure can also be made by committing code to the repo. Examples include changing the server type, adding an S3 bucket, or changing the size of the server disk.

- Docker images and the build cache are stored in Amazon ECR.

- The total build time for an average commit to the AdminForth app (including Vite rebuilds) is around 2 minutes.
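"Every resource is described in code" can be illustrated with Terraform's JSON configuration syntax (`*.tf.json` files), which Terraform reads alongside regular `.tf` files. The Python sketch below emits a minimal config with an ECR repository and an EC2 instance. Changing the server type or disk size then becomes a one-argument change that you commit. Some names are hypothetical: the resource names `app`, the repository `myadmin` and the `ami_id` variable. A real setup also needs networking, a security group and IAM permissions, which are omitted here.

```python
import json


def terraform_config(instance_type: str = "t3a.small", disk_gb: int = 20,
                     region: str = "us-east-1", repo_name: str = "myadmin") -> dict:
    """Return a minimal Terraform JSON config (hypothetical names) for ECR + EC2."""
    return {
        # AMI ID is passed in at plan time, e.g. via -var or a .tfvars file.
        "variable": {"ami_id": {"type": "string"}},
        "provider": {"aws": {"region": region}},
        "resource": {
            # Repository that receives the pushed images and the build cache.
            "aws_ecr_repository": {"app": {"name": repo_name}},
            "aws_instance": {
                "app": {
                    "ami": "${var.ami_id}",
                    "instance_type": instance_type,
                    # Root EBS volume; resizing the disk is a change to this value.
                    "root_block_device": {"volume_size": disk_gb, "volume_type": "gp2"},
                }
            },
        },
    }


if __name__ == "__main__":
    # Redirect stdout to main.tf.json and run `terraform plan` in that directory.
    print(json.dumps(terraform_config(), indent=2))
```

For example, `terraform_config(instance_type="t3a.medium", disk_gb=40)` describes a bigger server. Once committed, the pipeline's `terraform apply` would roll out that change.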